Recognize Drawings using ML Kit

Using ML Kit’s Digital Ink Recognition API, we can recognize handwritten text or sketches that the user draws on the screen. I made a simple app to play with this API. As you can see in the video below, you can draw anything and the app detects it in real time. The guesses are pretty accurate and, to be honest, I am quite impressed.

In this blog post, we will be building this app.

Let’s start

We need the following dependency to get started.
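For reference, the Digital Ink Recognition artifact is published as `com.google.mlkit:digital-ink-recognition`. Here is a sketch of the module-level Gradle entry, assuming the Kotlin DSL. The version number is an assumption, so check the ML Kit release notes for the current one.

```kotlin
// app/build.gradle.kts (module-level build file)
dependencies {
    // Version 18.1.0 is an assumption; use the latest one listed in the ML Kit release notes.
    implementation("com.google.mlkit:digital-ink-recognition:18.1.0")
}
```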

We have to download the model that will be used to recognize the sketches. Once it has been downloaded, it can be used even without an Internet connection. The size of the model is around 20 MB. In this example, I am using the AutoDraw model to detect sketches made by the user. The API supports more than 300 languages and has shape and emoji classifiers too.
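A minimal sketch of the download step, assuming the 18.x package layout (`com.google.mlkit.vision.digitalink`) and the AutoDraw language tag `zxx-Zsym-x-autodraw`. The function name `downloadAutoDrawModel`, the `onReady` callback, and the log tag are hypothetical names used for illustration.

```kotlin
import android.util.Log
import com.google.mlkit.common.MlKitException
import com.google.mlkit.common.model.DownloadConditions
import com.google.mlkit.common.model.RemoteModelManager
import com.google.mlkit.vision.digitalink.DigitalInkRecognitionModel
import com.google.mlkit.vision.digitalink.DigitalInkRecognitionModelIdentifier

private const val TAG = "ModelDownload" // hypothetical log tag

// Hypothetical helper: resolves the AutoDraw model and downloads it if needed.
fun downloadAutoDrawModel(onReady: (DigitalInkRecognitionModel) -> Unit) {
    // fromLanguageTag throws if the tag cannot be parsed and returns null if no model matches.
    val modelIdentifier: DigitalInkRecognitionModelIdentifier? = try {
        DigitalInkRecognitionModelIdentifier.fromLanguageTag("zxx-Zsym-x-autodraw")
    } catch (e: MlKitException) {
        Log.e(TAG, "Could not parse the language tag", e)
        null
    }
    if (modelIdentifier == null) {
        Log.e(TAG, "No model found for this tag")
        return
    }

    val model = DigitalInkRecognitionModel.builder(modelIdentifier).build()

    // Default conditions; DownloadConditions.Builder can also restrict downloads to Wi-Fi.
    RemoteModelManager.getInstance()
        .download(model, DownloadConditions.Builder().build())
        .addOnSuccessListener { onReady(model) }
        .addOnFailureListener { e -> Log.e(TAG, "Model download failed", e) }
}
```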

In the above code snippet, we initialized modelIdentifier using the language tag for the AutoDraw model. You can use the identifier of any other supported model instead. We then use this modelIdentifier to download the model.

Before we use the model to recognize what the user is drawing, we need to create a canvas for the user to draw on. We can make a custom view by extending the View class and overriding its onTouchEvent method. The following code snippet shows how we can override the onTouchEvent method of our custom view.
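A minimal sketch of such a view, not necessarily the app's exact code. The class name `DrawingView`, the `onStrokeEvent` callback, and the paint settings are assumptions made for illustration.

```kotlin
import android.content.Context
import android.graphics.Canvas
import android.graphics.Color
import android.graphics.Paint
import android.graphics.Path
import android.util.AttributeSet
import android.view.MotionEvent
import android.view.View

// Hypothetical custom view that draws the finger's path and forwards touch events.
class DrawingView @JvmOverloads constructor(
    context: Context,
    attrs: AttributeSet? = null
) : View(context, attrs) {

    // Hypothetical hook: set this to forward each event to the stroke-collecting code.
    var onStrokeEvent: ((MotionEvent) -> Unit)? = null

    private val path = Path()
    private val paint = Paint(Paint.ANTI_ALIAS_FLAG).apply {
        color = Color.BLACK
        style = Paint.Style.STROKE
        strokeWidth = 10f
        strokeCap = Paint.Cap.ROUND
        strokeJoin = Paint.Join.ROUND
    }

    override fun onDraw(canvas: Canvas) {
        super.onDraw(canvas)
        canvas.drawPath(path, paint)
    }

    override fun onTouchEvent(event: MotionEvent): Boolean {
        when (event.actionMasked) {
            // Finger down: start a new segment at the touch position.
            MotionEvent.ACTION_DOWN -> path.moveTo(event.x, event.y)
            // Finger moved or lifted: extend the segment to the new position.
            MotionEvent.ACTION_MOVE, MotionEvent.ACTION_UP -> path.lineTo(event.x, event.y)
            else -> return super.onTouchEvent(event)
        }
        onStrokeEvent?.invoke(event)
        invalidate() // request a redraw so the new segment appears
        return true
    }
}
```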

In the above code snippet, we are drawing the path that the user’s finger is traversing on the screen to a canvas. We need to send all the coordinates of this path to the Digital Ink Recognition API. This is what the addNewTouchEvent method does.
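Here is a sketch of what an addNewTouchEvent method can look like, assuming the 18.x package layout. The enclosing `StrokeManager` object and the `buildInk` helper are hypothetical names. The `Ink.Point.create`, `Ink.Stroke.builder`, and `Ink.builder` calls are ML Kit's.

```kotlin
import android.view.MotionEvent
import com.google.mlkit.vision.digitalink.Ink

// Hypothetical holder for the builders that collect the user's drawing.
object StrokeManager {
    private var inkBuilder = Ink.builder()
    private var strokeBuilder = Ink.Stroke.builder()

    fun addNewTouchEvent(event: MotionEvent) {
        // Each point carries its coordinates and a timestamp in milliseconds.
        val point = Ink.Point.create(event.x, event.y, System.currentTimeMillis())
        when (event.actionMasked) {
            MotionEvent.ACTION_DOWN, MotionEvent.ACTION_MOVE -> strokeBuilder.addPoint(point)
            MotionEvent.ACTION_UP -> {
                // Finger lifted: finish this stroke and hand it to the ink.
                strokeBuilder.addPoint(point)
                inkBuilder.addStroke(strokeBuilder.build())
                // Start with an empty builder for the next stroke.
                strokeBuilder = Ink.Stroke.builder()
            }
        }
    }

    // Builds the Ink collected so far, ready to be passed to the recognizer.
    fun buildInk(): Ink = inkBuilder.build()
}
```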

You can call the above method from your custom view every time there is a new touch event. An Ink object is a vector representation of the user’s drawing. It contains the coordinates and timestamp of every point in each stroke that the user has made. In the code snippet above, you might have noticed that a stroke starts when the user puts their finger on the screen and ends when the finger is lifted. This is why strokeBuilder is reinitialized whenever there is an ACTION_UP event: the next stroke then starts from an empty strokeBuilder.

This means that strokeBuilder collects the coordinates and timestamps of a single stroke while the user’s finger is on the screen. When the finger is lifted, the finished stroke is added to the inkBuilder, and when the user starts drawing again, the points of the new stroke are collected in the fresh strokeBuilder. It is this inkBuilder that constructs the Ink that gets passed on for recognition.

Now that we have downloaded our model and have the path of the drawing with us, we can finally use this information to recognize it. The following code snippet does exactly that.

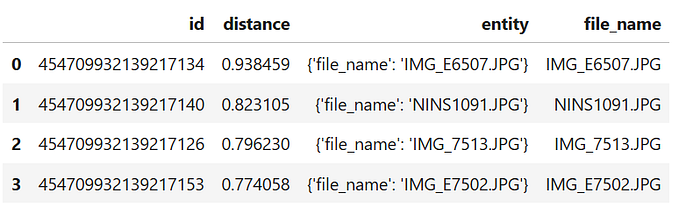
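The recognition step can be sketched as follows, assuming the 18.x package layout, a `model` that has already been downloaded, and an `ink` built from the inkBuilder. The function name `recognizeSketch`, the `onResult` callback, and the log tag are hypothetical.

```kotlin
import android.util.Log
import com.google.mlkit.vision.digitalink.DigitalInkRecognition
import com.google.mlkit.vision.digitalink.DigitalInkRecognitionModel
import com.google.mlkit.vision.digitalink.DigitalInkRecognizerOptions
import com.google.mlkit.vision.digitalink.Ink

private const val TAG = "Recognition" // hypothetical log tag

// Hypothetical helper: runs recognition and reports the top candidate's text.
fun recognizeSketch(
    model: DigitalInkRecognitionModel,
    ink: Ink,
    onResult: (String) -> Unit
) {
    // For brevity a recognizer is created per call; a real app would likely
    // create it once, reuse it, and close() it when it is no longer needed.
    val recognizer = DigitalInkRecognition.getClient(
        DigitalInkRecognizerOptions.builder(model).build()
    )

    recognizer.recognize(ink)
        .addOnSuccessListener { result ->
            // Candidates are ordered from most to least likely.
            val best = result.candidates.firstOrNull()
            if (best != null) onResult(best.text)
        }
        .addOnFailureListener { e -> Log.e(TAG, "Recognition failed", e) }
}
```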

The result object that is returned in the success listener contains a list of candidates for what the user’s sketch might be. Since we are using a shape classifier, each candidate also has a score associated with it, and the first candidate in the list is the one that the API considers the most likely match.

You might have noticed that in the video I was clearing the screen and making a new sketch. If you also want to do that you can simply reinitialize the inkBuilder.
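A minimal sketch of that reset, assuming the 18.x package layout. The `InkHolder` class and its method names are hypothetical; the only ML Kit call involved is `Ink.builder()`.

```kotlin
import com.google.mlkit.vision.digitalink.Ink

// Hypothetical wrapper around the builder that accumulates the drawing.
class InkHolder {
    private var inkBuilder = Ink.builder()

    fun addStroke(stroke: Ink.Stroke) {
        inkBuilder.addStroke(stroke)
    }

    fun build(): Ink = inkBuilder.build()

    // Dropping the old builder discards every stroke collected so far.
    fun clear() {
        inkBuilder = Ink.builder()
    }
}
```

The custom view needs a matching reset so the old lines disappear from the screen. If it draws from a `Path`, calling `reset()` on that path and then `invalidate()` on the view is one way to do it.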

That’s it! You have a fully functional app now. I remember trying to build a similar app a few years ago, and it required a lot of code for something that can now be done in a few lines. ML Kit libraries have simplified a lot of difficult tasks for mobile developers.

The GitHub repository of the project has been linked below. Feel free to play with the app.

If you have any kind of feedback, feel free to connect with me on Twitter.