On-Device Machine Learning In Android: Frameworks and Ecosystem

Tools And Libraries For On-Device ML In Android

Machine learning has a long and celebrated history in desktop and GPU environments, mainly because of its heavy demand for compute power and runtime. As models in every area of ML grow rapidly in parameter count, the need for smaller, lightweight models that can run on battery-powered, low-compute devices has increased as well. The smartphone is one of the most important consumer electronic devices: thanks to its wide availability and rich features, it occupies a major part of our digital lives and is a key platform for B2C services.

Deploying machine learning models on mobile devices lets organizations roll out features that reach consumers directly, such as advanced image processing, NLP services, chatbots or features that depend on sensor data. While deploying such features, an organization may face several challenges that are uncommon in the desktop world, where a mature ecosystem already exists.

In this article, we’ll explore the on-device ML landscape specifically for the Android ecosystem.

On-Device Vs. Remote/Server Inference

- Inference time: The time an ML model takes to make a prediction depends largely on the size of its inputs and on the hardware it runs on. Server-side inference may be faster when the server hardware is optimized for the task, whereas on-device inference should be the go-to option when the model is small and the challenges discussed below do not hinder development.

- Dependence on internet: Server-side inference requires the mobile device to keep an internet connection for the entire round-trip to the server. Larger inputs such as videos or data files may also suffer from unpredictable upload/download speeds.

- App size: On-device models bundled with the app have to be stored in its internal storage, which increases the overall package size. A common solution is to download the model after the app is installed, with the user’s consent, before running inference.

- Ease of implementation: Running inference on the server (i.e., in a desktop-like environment) is easier thanks to the abundance of packages and runtimes. A Python developer can write a single function that runs a model on the server, whereas deploying a model on-device requires mobile-specific skills.
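App size and memory use both scale with the size of a model’s weights, which can be estimated as parameter count × bytes per parameter. The helper below is a hypothetical sketch (ModelSizeEstimate is not part of any library) that ignores file-format overhead, so its results are lower bounds. For example, a model with 25 million parameters stored as 32-bit floats needs about 100 MB for its weights alone, while the same weights quantized to 8-bit integers need about 25 MB.

```java
// Back-of-the-envelope estimate of the storage/memory taken by a model's
// raw weights. Real model files also contain graph structure and metadata,
// so treat the result as a lower bound.
final class ModelSizeEstimate {

    /** Bytes per parameter for a few common weight precisions. */
    enum Precision {
        FLOAT32(4), FLOAT16(2), INT8(1);

        final int bytesPerParam;

        Precision(int bytesPerParam) {
            this.bytesPerParam = bytesPerParam;
        }
    }

    /** Approximate size of the raw weights in megabytes (1 MB = 1,000,000 bytes). */
    static double weightsMegabytes(long parameterCount, Precision precision) {
        return parameterCount * (double) precision.bytesPerParam / 1_000_000.0;
    }
}
```

Calling weightsMegabytes(25_000_000L, Precision.FLOAT32) gives 100.0, and the same call with Precision.INT8 gives 25.0.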

Challenges In Mobile Deployment

- Restricted ecosystem of tools: Not all ML frameworks support mobile deployment or provide SDKs for mobile platforms. Popular options such as TensorFlow, PyTorch and ONNX (through onnxruntime) do have dedicated SDKs for iOS and Android, which makes deployment easier for app developers. ONNX is a model representation format supported by many other frameworks; it is discussed later in this article.

- Constrained compute power: One of the first challenges a developer faces is the model’s large demand for compute. This is rarely an issue for small models that do not exceed a few MB. Once a model grows beyond roughly 75–100 MB, the memory needed to hold its parameters and the compute needed to use them increase greatly. The Android OS may kill an app that requests resources beyond certain limits, which badly disrupts the user experience.

- Lack of pre/post-processing tools: Even after the model has been converted to a mobile-compatible format, app developers still have to build a pre/post-processing pipeline for it. In desktop environments, Python packages and the functions they provide make such pipelines easy to build. Replicating those functions in an app, by adding other packages to its build, can be difficult, especially if the model requires a special operation.
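As an example of a pre-processing step that is one line in Python but must be written by hand in an app, consider layout conversion. Android bitmaps yield interleaved pixels (height × width × channels, HWC), while many models exported from PyTorch expect planar, channels-first input (CHW). The sketch below assumes the pixels have already been unpacked into a float array; hwcToChw is a hypothetical helper, equivalent to NumPy’s image.transpose(2, 0, 1).

```java
// Converts an interleaved HWC float image into planar CHW order.
// Equivalent to NumPy's image.transpose(2, 0, 1) on an (H, W, C) array.
final class LayoutConversion {

    static float[] hwcToChw(float[] hwc, int height, int width, int channels) {
        if (hwc.length != height * width * channels) {
            throw new IllegalArgumentException("Buffer size does not match H*W*C");
        }
        float[] chw = new float[hwc.length];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                for (int c = 0; c < channels; c++) {
                    // Position of pixel (y, x), channel c in each layout.
                    int hwcIndex = (y * width + x) * channels + c;
                    int chwIndex = c * height * width + y * width + x;
                    chw[chwIndex] = hwc[hwcIndex];
                }
            }
        }
        return chw;
    }
}
```

For a 1 × 2 RGB image stored as {r0, g0, b0, r1, g1, b1}, the result is {r0, r1, g0, g1, b0, b1}.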

ML Services For Android

MLKit

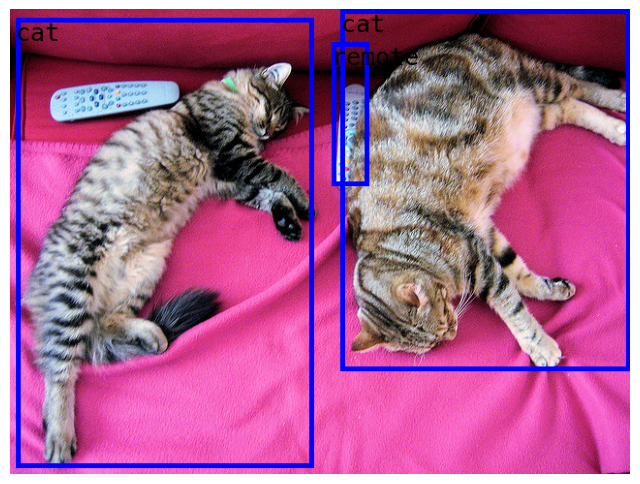

MLKit is a suite of tools from Google for common machine learning tasks, such as optical character recognition (OCR), language detection and translation, object detection and face detection, on Android and iOS devices. For a native Android developer with little or no prior knowledge of ML, MLKit is arguably the best-documented and easiest-to-integrate package of tools.

MLKit’s documentation is exhaustive: each use case is described conceptually, followed by its Android and iOS integration. Working with multiple use cases shouldn’t be difficult either, as the API is consistent and intuitive across input types such as camera frames, still images and text.

Mediapipe

Mediapipe is another Google offering, providing highly customizable ML solutions. Common tasks such as image segmentation, image classification, face detection and face landmark detection, and text classification are exposed through an API similar to MLKit’s.

Mediapipe also provides an easy-to-use interface for customizing pretrained models with your own data. Unlike MLKit, Mediapipe solutions are also offered as Python packages and as JS libraries for the web.

TensorFlow Lite

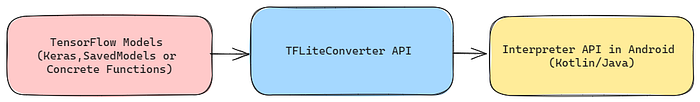

TensorFlow is a machine learning framework that allows developers to train deep learning models, process large amounts of data and deploy models across multiple platforms. Its core is written in C++ and CUDA, with bindings in Python, Java, C++ and JS. Models built with TensorFlow can be saved for deployment or further training in multiple formats, such as SavedModel or the Keras .h5 and .keras formats.

As a framework, TensorFlow can be heavyweight for mobile: it contains many operations, plus engines for automatic differentiation and for building computation graphs, which are needed mostly while training a model and not while running inference.

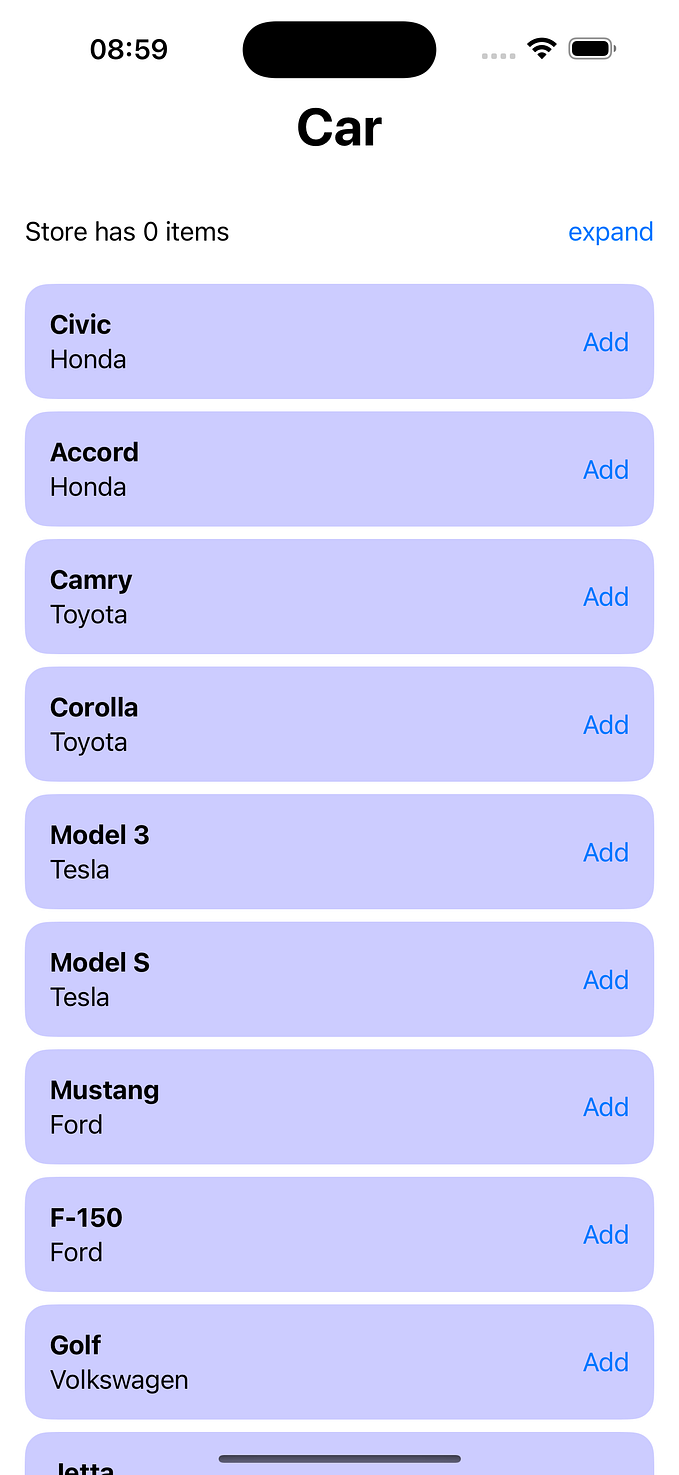

TensorFlow Lite is a specialized runtime that provides a reduced set of operations designed to execute deep learning models efficiently. It uses its own serialization format, .tflite, which is produced by the TFLiteConverter utility. TFLiteConverter can convert a SavedModel, a Keras model (for example, one saved as .h5 or .keras) or concrete functions to the TFLite format.

Once converted to the TFLite format, the model can be used efficiently in Android apps. TensorFlow Lite’s Maven package runs TFLite models through APIs such as Interpreter.
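Interpreter.run(input, output) accepts several input types, including a ByteBuffer; for ByteBuffer inputs, the TFLite documentation asks for a direct buffer in native byte order. The sketch below packs float32 values that way. TfliteInputBuffer is a hypothetical helper name, and a float32 input tensor is an assumption, so check your model’s input type first.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Packs float32 model input into a direct ByteBuffer in native byte order,
// the form the TFLite Interpreter expects for ByteBuffer inputs.
final class TfliteInputBuffer {

    private static final int BYTES_PER_FLOAT = 4;

    static ByteBuffer fromFloats(float[] values) {
        ByteBuffer buffer = ByteBuffer
                .allocateDirect(values.length * BYTES_PER_FLOAT)
                .order(ByteOrder.nativeOrder());
        for (float v : values) {
            buffer.putFloat(v);
        }
        // Reset the position so the interpreter reads from the beginning.
        buffer.rewind();
        return buffer;
    }
}
```

In an app, the buffer could then be passed as interpreter.run(TfliteInputBuffer.fromFloats(pixels), output), with output sized to match the model’s output tensor, for example a float[1][numClasses] array for a classifier.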

TensorFlow Lite also supports on-device training, meaning models can be fine-tuned directly on the device using locally available data.

TensorFlow Lite Support Library

The TFLite Support Library provides helper functions around the main TFLite library, such as creating a TensorImage from a Bitmap, transforming images with ImageProcessor (using operations like ResizeOp) and transforming tensors with TensorProcessor (using operations like CastOp and NormalizeOp). It also provides helpers for loading bitmaps and audio clips easily.
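NormalizeOp(mean, stddev) computes (value - mean) / stddev for every element. The plain-Java sketch below spells out that arithmetic for the packed ARGB ints returned by Bitmap.getPixels on Android, producing interleaved RGB floats. PixelNormalizer is a hypothetical name, and whether your model wants mean = stddev = 127.5 (mapping [0, 255] to [-1, 1]) or other values depends on how it was trained.

```java
// Unpacks ARGB pixels into an interleaved RGB float array and normalizes
// each channel value as (value - mean) / stddev, the formula NormalizeOp uses.
final class PixelNormalizer {

    static float[] toNormalizedRgb(int[] argbPixels, float mean, float stddev) {
        float[] out = new float[argbPixels.length * 3];
        for (int i = 0; i < argbPixels.length; i++) {
            int p = argbPixels[i];
            // Drop the alpha byte and extract the 8-bit R, G and B channels.
            int r = (p >> 16) & 0xFF;
            int g = (p >> 8) & 0xFF;
            int b = p & 0xFF;
            out[3 * i] = (r - mean) / stddev;
            out[3 * i + 1] = (g - mean) / stddev;
            out[3 * i + 2] = (b - mean) / stddev;
        }
        return out;
    }
}
```

With mean = stddev = 127.5, an opaque pure-red pixel (0xFFFF0000) becomes {1.0, -1.0, -1.0}.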

TensorFlow Lite Task Library

Much like MLKit and Mediapipe, the TFLite Task Library provides a set of clean APIs for common ML problems such as image, text and audio classification and BERT question answering. Its documentation also explains how to build custom Task APIs, which involves JNI and C++.

ONNX and onnxruntime

ONNX, the Open Neural Network Exchange format, is a widely adopted serialization format for ML models, including neural networks. ONNX tries to solve the fragmentation and interoperability problems that arise mainly because different ML frameworks use different model-saving formats. TensorFlow, PyTorch, scikit-learn and other popular ML frameworks support conversion to ONNX, and the converted models can then be executed with onnxruntime on a wide range of platforms.

onnxruntime’s Maven package provides APIs to execute .onnx or .ort models, and its API is quite similar to TFLite’s: we create input tensors (OnnxTensor) with the shapes the model expects, then have an OrtSession run the model and return the results.
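For a classifier exported without a final softmax layer, the values returned by OrtSession.run are raw logits, and turning them into probabilities is up to the app. The sketch below assumes the logits have already been extracted from the session result into a float[]; ClassifierPostprocessing is a hypothetical helper. Subtracting the largest logit before exponentiating is the standard trick to keep Math.exp from overflowing.

```java
// Post-processing for raw classifier outputs (logits).
final class ClassifierPostprocessing {

    /** Numerically stable softmax: subtract the max logit before exp. */
    static float[] softmax(float[] logits) {
        float max = Float.NEGATIVE_INFINITY;
        for (float v : logits) {
            max = Math.max(max, v);
        }
        float[] probs = new float[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            double e = Math.exp(logits[i] - max);
            probs[i] = (float) e;
            sum += e;
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] = (float) (probs[i] / sum);
        }
        return probs;
    }

    /** Index of the largest value; assumes a non-empty array. */
    static int argmax(float[] values) {
        int best = 0;
        for (int i = 1; i < values.length; i++) {
            if (values[i] > values[best]) {
                best = i;
            }
        }
        return best;
    }
}
```

argmax(softmax(logits)) then gives the predicted class index, which can be looked up in the model’s label list.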

Flower for Federated Learning

Federated learning is a technique that distributes training across multiple clients such that the training data stays on each client instead of travelling to a central server, mainly for data-privacy reasons. Each client downloads a copy of the model from the server, fine-tunes it on the data available on-device and sends the result back. The server then aggregates the training results received from the clients and updates its central model.
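One common aggregation rule is Federated Averaging (FedAvg): the new central weights are the average of the client weights, each weighted by the number of training examples that client used, i.e. w = Σ_k (n_k / n) · w_k. The plain-Java sketch below shows only this arithmetic, not Flower’s API, and assumes each client’s parameters have been flattened into a single float[]; FederatedAveraging is a hypothetical name.

```java
// Federated Averaging (FedAvg): the new weights are the sum over clients of
// (clientExamples / totalExamples) * clientWeights.
final class FederatedAveraging {

    static float[] aggregate(float[][] clientWeights, int[] clientExampleCounts) {
        if (clientWeights.length == 0 || clientWeights.length != clientExampleCounts.length) {
            throw new IllegalArgumentException("Expected one example count per client");
        }
        int dim = clientWeights[0].length;
        long totalExamples = 0;
        for (int count : clientExampleCounts) {
            totalExamples += count;
        }
        if (totalExamples <= 0) {
            throw new IllegalArgumentException("Total example count must be positive");
        }
        // Accumulate in double to limit rounding error across many clients.
        double[] sum = new double[dim];
        for (int k = 0; k < clientWeights.length; k++) {
            if (clientWeights[k].length != dim) {
                throw new IllegalArgumentException("All clients must send the same number of parameters");
            }
            double share = (double) clientExampleCounts[k] / totalExamples;
            for (int i = 0; i < dim; i++) {
                sum[i] += share * clientWeights[k][i];
            }
        }
        float[] averaged = new float[dim];
        for (int i = 0; i < dim; i++) {
            averaged[i] = (float) sum[i];
        }
        return averaged;
    }
}
```

For two clients sending {1, 2} (trained on 1 example) and {3, 4} (trained on 3 examples), the aggregated weights are {2.5, 3.5}: the client with more data pulls the average towards its update.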

Flower provides the services needed to handle client-server communication and the transmission of models/parameters over the network, using gRPC under the hood. Flower also provides an Android example that trains a CIFAR-10 model in a federated setting.

Android and Kotlin Tools

Camera2/CameraX for Computer Vision Apps

For developers building apps that run ML models on camera frames, learning CameraX (or the lower-level Camera2 API) is worthwhile, as it provides more control over the inputs given to the model. CameraX’s ImageAnalysis use case calls the analyze method of an ImageAnalysis.Analyzer for every frame, passing it an ImageProxy that can be converted to a Bitmap and fed to the ML model.

For instance, to detect faces in real time from the camera feed, we call MLKit’s faceDetector.process (with the frame wrapped in an InputImage) inside the analyze method. Moreover, if we wish to draw bounding boxes or segmentation masks over the camera preview, a custom SurfaceView can be helpful, as it provides a Canvas object to draw on.
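Before a detection can be drawn on the Canvas, its box has to be mapped from the model’s input coordinates back to the overlay’s coordinates. The sketch below is a hypothetical helper assuming the simplest case: the frame was stretched to the model input size (no cropping or letterboxing) and the preview fills the whole overlay view. Rotation and front-camera mirroring would need extra handling.

```java
// Maps a bounding box from model-input coordinates (e.g. a 320x320 input)
// to overlay-view coordinates so it can be drawn over the camera preview.
// Assumes no cropping/letterboxing and a preview that fills the view.
final class BoxMapper {

    /** Axis-aligned box given by its left, top, right and bottom edges. */
    static final class Box {
        final float left;
        final float top;
        final float right;
        final float bottom;

        Box(float left, float top, float right, float bottom) {
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }
    }

    static Box toViewCoordinates(Box modelBox, int modelWidth, int modelHeight,
                                 int viewWidth, int viewHeight) {
        // Separate scale factors, since the aspect ratios may differ.
        float scaleX = (float) viewWidth / modelWidth;
        float scaleY = (float) viewHeight / modelHeight;
        return new Box(
                modelBox.left * scaleX,
                modelBox.top * scaleY,
                modelBox.right * scaleX,
                modelBox.bottom * scaleY);
    }
}
```

The mapped values could then be drawn with Canvas.drawRect(left, top, right, bottom, paint).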

In Jetpack Compose, the same kind of overlay can be drawn with the drawBehind (or drawWithContent) Modifier, which exposes a DrawScope for drawing on the composable.

JNI and C++ Integration in Android Apps

Integrating C++ into pre/post-processing pipelines can boost the performance of ML inference. For simple yet unique pre/post-processing techniques, writing the functions in C++ and exposing them to Kotlin through JNI can be straightforward, but the implementation becomes harder as the complexity of the technique increases.

MLKit, Mediapipe and TF Lite wrap their C++ inference code with JNI bindings. This removes the need to write a separate Java-first SDK and keeps the performance-critical code native.

multik

multik is a Kotlin library for working with multi-dimensional arrays, which can be useful for reshaping arrays or casting them to another data type during pre/post-processing. It also supports aggregations, math operations, and multi-axis indexing and slicing.

kotlindl

kotlindl provides a high-level API, similar to Keras, for building and training deep learning models.

Coroutines

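Coroutines are lightweight, thread-like units of concurrency that provide an intuitive model for performing blocking IO or CPU-intensive tasks in Kotlin codebases. Downloading models, pre/post-processing data and running inference are blocking tasks that should be kept off the main thread, which makes them good candidates for coroutines (for example, Dispatchers.IO for downloads and Dispatchers.Default for CPU-bound inference).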

Conclusion

This blog will be updated at regular intervals with new tools for implementing ML in Android apps. Feel free to share suggestions or point out inconsistencies in the comments below! On-device ML has a bright future ahead; follow along for more tutorials and updates!

Thanks for reading, and have a nice day ahead!