Java Streams vs. Kotlin Sequences

Since Java streams can be used from Kotlin code, a common question among developers who use Kotlin for backend development is whether to use streams or sequences. Along the way, I’ve also included some surprises that affect how we work with sequences and structure our code.

This article analyzes both options from 3 perspectives to determine their strengths and weaknesses:

- Null Safety

- Readability & Simplicity

- Performance Overhead

Null Safety

Using Java streams within Kotlin code results in platform types (types of unknown nullability, shown with a trailing !) when using non-primitive values. For example, the following evaluates to List<Person!> instead of List<Person>, so the result is less strongly typed:

people.stream()

.filter { it.age > 18 }

.toList() // evaluates to List<Person!>

When dealing with values that might not be present, sequences return nullable types whereas streams wrap the result in an Optional:

// Sequence

val nameOfAdultWithLongName = people.asSequence()

...

.find { it.name.length > 5 }

?.name

// Stream

val nameOfAdultWithLongName = people.stream()

...

.filter { it.name.length > 5 }

.findAny()

.get() // unsafe unwrapping of Optional

.name

Although we could use orElse(null) in the example above, the compiler doesn’t force us to use Optional wrappers correctly. Even if we did use orElse(null), the resulting value would be a platform type so the compiler doesn’t enforce safe usage. This pattern has bitten us several times as a runtime exception will be thrown with the stream version if no person is found. Sequences, however, use Kotlin nullable types so safe usage is enforced at compile time.

Therefore sequences are safer from a null-safety perspective.
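As a minimal sketch of the safer variants, the two functions below return a nullable String instead of calling get(). The Person class and the function names are hypothetical, since the article doesn't show the real definitions. Note that the stream version only ends up null-safe because we chose to declare the return type as String? ourselves; the compiler would have accepted String as well.

```kotlin
// Hypothetical model; the article does not show the real Person class.
data class Person(val name: String, val age: Int)

// Sequence version: find returns Person?, so the compiler forces us to use
// the safe-call operator before reading the name.
fun longNameViaSequence(people: List<Person>): String? =
    people.asSequence()
        .filter { it.age >= 18 }
        .find { it.name.length > 5 }
        ?.name

// Stream version: the Optional is unwrapped with orElse(null). The result is
// a platform type, so declaring String? here is our own choice, not something
// the compiler enforces.
fun longNameViaStream(people: List<Person>): String? =
    people.stream()
        .filter { it.age >= 18 }
        .filter { it.name.length > 5 }
        .findAny()
        .map { it.name }
        .orElse(null)
```

Both functions return null for an empty list rather than throwing at runtime.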

Readability & Simplicity

Using collectors for terminal operations makes streams more verbose:

// Sequence

val adultsByGender = people.asSequence()

.filter { it.age >= 18 }

.groupBy { it.gender }

// Stream

val adultsByGender = people.stream()

.filter { it.age >= 18 }

.collect(Collectors.groupingBy<Person, Gender> { it.gender })

Although the above isn’t overly complex, it took me several minutes to get the version with streams to compile because the generic types were required. I expected a map from Gender to List<Person> so I struggled to specify the types correctly (notice how the order is also reversed). I had to pull up the signature of the collect & groupingBy functions to see how they’re connected before I finally got it to compile.
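For anyone who wants to try this comparison, here is a self-contained sketch of both versions. The Person and Gender definitions are assumptions made for illustration. Declaring the return type explicitly on the stream version is one way to pin the platform types down to Map<Gender, List<Person>>.

```kotlin
import java.util.stream.Collectors

// Hypothetical model types; the article does not show the real definitions.
enum class Gender { FEMALE, MALE }
data class Person(val name: String, val age: Int, val gender: Gender)

// Sequence version: groupBy is a terminal operation that returns
// Map<Gender, List<Person>> directly.
fun adultsByGenderViaSequence(people: List<Person>): Map<Gender, List<Person>> =
    people.asSequence()
        .filter { it.age >= 18 }
        .groupBy { it.gender }

// Stream version: the collector needs explicit type arguments in the order
// <element type, key type>, the reverse of how the resulting map reads.
fun adultsByGenderViaStream(people: List<Person>): Map<Gender, List<Person>> =
    people.stream()
        .filter { it.age >= 18 }
        .collect(Collectors.groupingBy<Person, Gender> { it.gender })
```

The two functions produce equal maps for the same input, so the extra ceremony in the stream version buys nothing here.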

Sequences can be shorter due to specialized actions:

// Sequence

people.asSequence()

.mapNotNull { it.testScore } // map & filter in 1 action

...

// Stream

people.stream()

.map { it.testScore }

.filter { it != null }

...

Sequences have cleaner aggregates:

// Sequence

val nameOfOldestHealthyPerson = people.asSequence()

.filter { it.isHealthy() }

.maxByOrNull { it.age } // this nullable variant was called maxBy before Kotlin 1.4

?.name

// Stream

val nameOfOldestHealthyPerson = people.stream()

.filter { it.isHealthy() }

.max(Comparator.comparing(Person::age))

.get()

.name

Streams make this example much clunkier since I needed to provide a comparator (there’s also a sneaky defect there: get() throws if no healthy person is found). Additionally, the safe-call and Elvis operators don’t work with Optional wrappers, resulting in more verbose code with streams.

Therefore sequences are shorter, simpler, and result in more idiomatic code.
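To illustrate the point about the safe-call and Elvis operators, here is a small sketch that supplies a fallback name in both styles. The Person class, the healthy property (standing in for isHealthy()), and the "nobody" default are all assumptions for this example. It uses maxByOrNull, the nullable-returning function in Kotlin 1.4 and later, and Comparator.comparingInt as one possible comparator.

```kotlin
// Hypothetical model; `healthy` stands in for the article's isHealthy().
data class Person(val name: String, val age: Int, val healthy: Boolean)

// Sequence version: the safe-call and Elvis operators handle the empty case.
fun oldestHealthyNameViaSequence(people: List<Person>): String =
    people.asSequence()
        .filter { it.healthy }
        .maxByOrNull { it.age }
        ?.name
        ?: "nobody"

// Stream version: the same logic has to go through the Optional API
// (map + orElse) because ?. and ?: do not apply to Optional.
fun oldestHealthyNameViaStream(people: List<Person>): String =
    people.stream()
        .filter { it.healthy }
        .max(Comparator.comparingInt<Person> { it.age })
        .map { it.name }
        .orElse("nobody")
```

Neither version can throw on an empty input, but the sequence version gets there with operators that Kotlin developers already use everywhere else.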

Performance Overhead

There are 3 main aspects that affect the performance overhead of sequences and streams:

- Primitive handling (boolean, char, byte, short, int, long, float, & double)

- Optional values

- Lambda creation

Memory Management

First, we need to understand the overhead of creating and managing memory on the JVM. We’ll cover the main topics that apply to most garbage collectors in a general manner as that will be sufficient for our purposes.

The vast majority of objects are discarded shortly after creation, so garbage collectors are tuned for this pattern. Creating an object on the JVM is very cheap, by some estimates about 10 times cheaper than a heap allocation in lower-level languages like C++, because the JVM just bumps a pointer in the pre-allocated Eden space where new objects are created. When the Eden space fills up, a minor garbage collection (GC) is triggered, which copies live objects from the Eden space into a much smaller survivor space and resets the new-object pointer to the beginning of the Eden space. When the survivor space is full, live objects from the survivor space are copied into a backup survivor space, and the current survivor space becomes the new backup. Objects that last several rounds in the survivor space are promoted to a much larger tenured space so that they are not copied again in the future. A minor GC of the Eden and survivor spaces typically completes in just a few milliseconds, even when these spaces make up 25% of a multi-gigabyte heap. This is because the vast majority of objects are no longer reachable when a minor GC is triggered, so just a tiny fraction (< 1%) of the memory ends up being inspected and copied during this process.

Very large objects skip the copying process as they’re created directly in the tenured space since they’re expected to stick around for a long time. When the tenured region is low on space, either due to the creation of a very large object or due to a promotion from the survivor space, then a major GC is triggered. Major GCs take significantly longer to complete as most of the objects in the very large tenured space get shifted around to make room. Major GCs are the main reason why some JVM applications experience degraded performance or poor response times.

The main takeaway points are:

- Minor GCs are a normal part of a healthy JVM process. These are extremely quick and run on a regular basis even when using a small fraction of the total available heap.

- Creating small objects is extremely cheap on the JVM as it just bumps a pointer in a pre-allocated region of memory.

- Small short-lived objects add virtually no memory-management overhead, as the cost of a garbage collection is driven by the objects that are still live.

- Creating small objects earlier than needed or hanging on to their references longer than needed hurts throughput and response times. These objects are copied around a bunch of times as they flow through the GC process and also increase the rate of GCs.

- Creating very large short-lived objects on a regular basis ends up being very expensive as these are created directly in the tenured space so they’ll increase the frequency of major GCs.

Now we can resume the evaluation.

Primitive Handling

Although Kotlin doesn’t expose primitive types in its type system, it uses primitives behind the scenes when possible. For example, a nullable Double (Double?) is stored as a java.lang.Double behind the scenes whereas a non-nullable Double is stored as a primitive double when possible.

Streams have primitive variants to avoid autoboxing but sequences do not:

// Sequence

people.asSequence()

.map { it.weight } // Autobox non-nullable Double

...

// Stream

people.stream()

.mapToDouble { it.weight } // DoubleStream from here onwards

...

However, if we capture them in a collection then they’ll be autoboxed anyway since generic collections store references. Additionally, if you’re already dealing with boxed values, unboxing and collecting them in a list is worse than passing along the boxed references so primitive streams can be detrimental when over-used:

// Stream

val testScores = people.stream()

.filter { it.testScore != null }

.mapToDouble { it.testScore!! } // Very bad! Use map { ... }

.toList() // Extra autoboxing because we unboxed them

Although sequences don’t have primitive variants, they avoid some autoboxing by including utilities to simplify common actions. For example, we can use sumOf { it.weight } instead of mapping the weight and summing it in a separate step. These reduce autoboxing and also simplify the code.
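A minimal sketch of the sumOf shortcut next to its primitive-stream counterpart. The DeliveryPackage class and the function names are assumptions for illustration.

```kotlin
// Hypothetical model with a non-nullable (primitive-backed) weight.
data class DeliveryPackage(val weight: Double)

// Sequence version: sumOf is an inline function, so the weights are added
// up directly instead of first being mapped into a Sequence<Double>.
fun totalWeightViaSequence(packages: List<DeliveryPackage>): Double =
    packages.asSequence().sumOf { it.weight }

// Stream version: mapToDouble switches to a DoubleStream, which also sums
// the primitive values without boxing them.
fun totalWeightViaStream(packages: List<DeliveryPackage>): Double =
    packages.stream().mapToDouble { it.weight }.sum()
```

In this case both approaches avoid wrapper objects, so the choice comes down to readability.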

When autoboxing happens as a result of sequences, this results in a very efficient memory-usage pattern. Sequences and streams pass each element through all sequence actions until reaching the terminal operation before moving on to the next element. This results in having just a single reachable autoboxed wrapper object at any point in time. In the worst-case scenario, just a single tiny wrapper object would be copied to the survivor space and all other previously generated wrappers would be discarded with zero extra overhead during the next minor garbage collection. The memory of these short-lived autoboxed objects won’t persist in the Eden or survivor spaces so this will utilize the efficient path of the garbage collector rather than causing major GCs.
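The element-at-a-time behavior described above can be observed with a small trace. This is only an illustrative sketch with hypothetical function names: it records the order in which the filter and map steps run, first for a sequence and then for the same operations applied directly to the list.

```kotlin
// Records the order of operations for a lazy sequence pipeline.
fun traceSequence(numbers: List<Int>): List<String> {
    val log = mutableListOf<String>()
    numbers.asSequence()
        .filter {
            log.add("filter $it")
            it % 2 == 1
        }
        .map {
            log.add("map $it")
            it * 10
        }
        .toList() // terminal operation that pulls the elements through
    return log
}

// Records the order of operations when each step runs eagerly on the list.
fun traceCollection(numbers: List<Int>): List<String> {
    val log = mutableListOf<String>()
    numbers
        .filter {
            log.add("filter $it")
            it % 2 == 1
        }
        .map {
            log.add("map $it")
            it * 10
        }
    return log
}
```

For the input listOf(1, 2, 3), the sequence trace is filter 1, map 1, filter 2, filter 3, map 3, whereas the collection trace is filter 1, filter 2, filter 3, map 1, map 3 because the whole filtered list is built before any mapping starts.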

All else being equal, avoiding autoboxing is preferred as there is a tiny overhead to create the wrapper objects and they add an extra layer of indirection. Therefore streams can be more efficient when working with temporary primitive values in separate stream actions. However, this only applies when using the specialized versions and also as long as we don’t overuse the primitive variants as they can be detrimental sometimes.

Optional Values

Streams create Optional wrappers when values might not be present (e.g. with min, max, reduce, find, etc.) whereas sequences use nullable types:

// Sequence

people.asSequence()

...

.find { it.name.length > 5 } // returns nullable Person

// Stream

people.stream()

...

.filter { it.name.length > 5 }

.findAny() // returns Optional<Person> wrapper

Therefore sequences are more efficient with optional values as they avoid creating the Optional wrapper object.

Lambda Creation

Sequences support mapping and filtering non-null values in one step, which reduces the number of lambda instances:

// Sequence

people.asSequence()

.mapNotNull { it.testScore } // create lambda instance

...

// Stream

people.stream()

.map { it.testScore } // create lambda instance

.filter { it != null } // create another lambda instance

...

Additionally, most terminal operations on sequences are inline functions that avoid the creation of the final lambda instance:

people.asSequence()

.filter { it.age >= 18 }

.forEach { println(it.name) } // forEach inlined at compile time

Therefore sequences create fewer lambda instances resulting in more efficient execution due to less indirection.
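One visible consequence of this inlining is that a return inside the forEach lambda can exit the enclosing function. The sketch below relies on that; the Person class and function name are hypothetical. The same return would not compile inside stream().forEach { }, because that lambda is turned into a separate Consumer object.

```kotlin
// Hypothetical model; the article does not show the real Person class.
data class Person(val name: String, val age: Int)

// Sequence.forEach is an inline function, so the lambda body is compiled
// into firstAdultName itself and `return` leaves the whole function.
fun firstAdultName(people: List<Person>): String? {
    people.asSequence()
        .filter { it.age >= 18 } // filter is not inline: this lambda is an object
        .forEach { return it.name } // no lambda object is created for this one
    return null
}
```

This is only meant to make the inlining observable; in real code, firstOrNull would express the same intent more directly.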

Performance Overhead Conclusions

Streams have specialized primitive versions to avoid autoboxing when performing multiple transformations on primitive values. Sequences have some mitigation strategies to make unnecessary autoboxing less common. Additionally, the heap usage that’s caused by sequence autoboxing flows through the efficient path of garbage collectors. Nevertheless, streams can be more efficient for primitive transformations as long as they’re not over-used.

Note that the order of operations can have a significant impact on the number of autoboxing occurrences:

// Before

val adultAgesSquared = people.asSequence()

.map { it.age } // autobox non-nullable age

.filter { it >= 18 } // throw away some autoboxed values

.map { it * it } // square and autobox again

.toList()

// After - No unnecessary autoboxing

val adultAgesSquared = people.asSequence()

.filter { it.age >= 18 }

.map { it.age * it.age } // single autobox

.toList()

After looking at hundreds of real-life usages in a business setting, the vast majority are more efficient with sequences because they require fewer lambdas, they don’t wrap optional values, and most terminal operations are inlined.

Cautionary Note

Both sequences and streams should be avoided for performance-critical code. As an example, performing intensive linear algebra manipulations on large datasets is best without sequences or streams because the added indirection can have a significant impact on these types of CPU-intensive scenarios. In such a scenario, the solution would be to use traditional loops rather than applying operations directly on collections such as people.map { it.age }.doSomethingElse { }.
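As a rough sketch of what the traditional-loop approach can look like for a linear algebra kernel, here is a dot product over primitive arrays. The function name is hypothetical and the example is deliberately simple.

```kotlin
// Dot product with an indexed loop over DoubleArray: the values stay
// primitive, and no lambda objects or intermediate collections are created.
fun dotProduct(a: DoubleArray, b: DoubleArray): Double {
    require(a.size == b.size) { "Vectors must have the same length" }
    var sum = 0.0
    for (i in a.indices) {
        sum += a[i] * b[i]
    }
    return sum
}
```

For example, dotProduct(doubleArrayOf(1.0, 2.0, 3.0), doubleArrayOf(4.0, 5.0, 6.0)) returns 32.0.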

Applying operations directly on collections could appear to be faster than streams or sequences in microbenchmarks as those wouldn’t accurately measure the throughput and response time impacts of an application. Such patterns significantly affect the memory management overhead and performance of real-life applications as they create and hold onto entire temporary collections instead of operating on one element at a time. When a minor GC is triggered (either by the code in question or by other threads or coroutines), these temporary collections will be copied multiple times during minor GCs as they bounce around the survivor spaces. Even worse, the temporary collections might be promoted or created directly in the tenured space and increase the rate of major GCs.

Microbenchmarks can be misleading because they don’t measure the impact on the larger application. My recommendation is to avoid premature optimization and instead rely on regular computer science techniques, such as estimating the worst-case memory and CPU impact using Big-O notation.

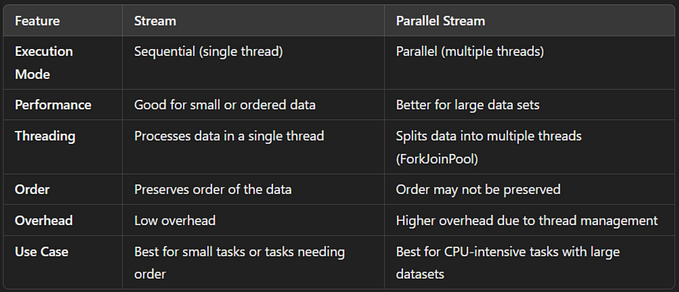

Overall Conclusions

No solution is best in all possible scenarios so I’ve summarized the results below.

Stream Advantages:

- Have primitive variants to avoid unnecessary autoboxing

- Enable easy parallelism via parallel streams (but note that several articles recommend avoiding parallel streams)

Sequence Advantages:

- Don’t introduce platform types

- Use compile-time null-safety and mesh well with safe-call & Elvis operators

- Are shorter due to fewer operations

- Have simpler aggregates

- Have simpler terminal operations

- Don’t create wrapper objects for values that might be missing

- Create fewer lambda instances and most terminal operations are inlined resulting in improved efficiency due to less indirection

- Have a simpler mental model

Since sequences are better in most scenarios, I never use streams as it simplifies the decision process and keeps the code consistent. I’m also conscious of extra autoboxing when manipulating primitive values so I organize the code to minimize that.
