Automating Code Reviews

Building software is a complex task. Some software is built by a single person, but often there are teams behind it, ranging from two people up to hundreds. Working with many people in the same code-base brings quite a few challenges. Tools like git help with working in parallel: they version our code (i.e. track changes over time) and make it easy to spot changes suggested by team members. But the more people work on the same code-base, the more changes get suggested, and these changes need to be reviewed by someone.

To ensure consistent code quality, code review is a core part of a software engineer’s day. Reviewing code covers multiple aspects: more visual ones, like conforming to a commonly defined code style (formatting, indentation, braces, …), but also logical ones, like the correctness of the code, the use of architecture patterns, performance, and more. To reduce the workload that code review puts on the team, we can automate parts of the process. In the following, we will look at adding automation to our code reviews, with a focus on code style and static code analysis. The examples are based on Kotlin (in an Android app), but the approach applies to other languages as well, for example Swift or Dart.

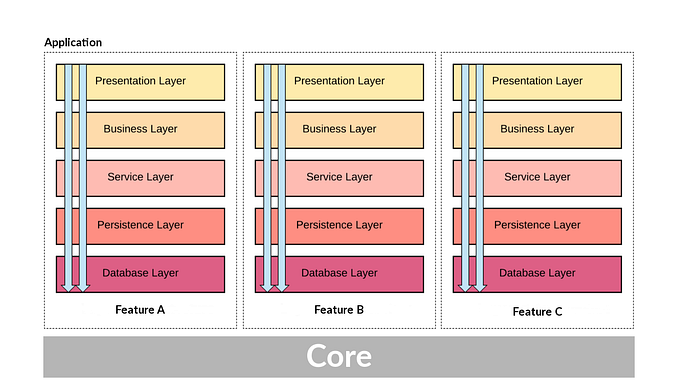

Working in a team means that decisions are made as a team. These decisions can concern workflows, software architecture or simply the commonly used code style. Some of them can be built into the daily workflow, for example into the IDE via .editorconfig for formatting, or via a shared code style file that can be imported into the IDE. Many other decisions can’t be enforced this way and must instead be documented somewhere to keep track of them. This allows existing team members as well as new joiners to understand which decisions the team has made, and to adjust to them.

Making decisions and writing them down is only one part, though: these decisions also need to be followed. This requires the team to be aligned and to work in the same direction. Everyone on the team needs to be aware of the decisions and willing to follow them. It’s also easy to forget certain things, and code review is usually the place where people are reminded of them.

With a growing team and a growing number of pull requests (or merge requests), the time spent in code review grows as well. Many pull requests end up with most of their comments about code style or about things that were decided and written down earlier, simply because the author was not aware of them or forgot about them. This costs a lot of time: reading the code, focusing on code style, spotting issues, and writing comments that then need to be addressed. Often the same issues come up again and again, resulting in duplicated comments and duplicated review work.

Let’s take a look at how this can be automated.

The first tool we will be using is DangerJS, the backbone of the setup. It is triggered for each pull request and runs a set of checks on the changes.

Danger runs during your CI process, and gives teams the chance to automate common code review chores.

By writing a Dangerfile, a small JavaScript (or TypeScript) file, we can script checks that access the pull request and its metadata.

For example, a Dangerfile can check whether a pull request modifies the changelog file (CHANGELOG.YML).

If the author didn’t add the changes to the changelog (or technically: if there was no modification of the changelog file in the pull request), a review comment will be posted.
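A minimal sketch of this check in TypeScript follows. To keep it self-contained, the Danger globals are replaced by a plain parameter; in an actual Dangerfile, the list of changed files could be built from `danger.git.modified_files` and `danger.git.created_files`, and a non-null result passed to `warn()` or `fail()`. The function name `checkChangelog` and the assumption that CHANGELOG.YML lives at the repository root are illustrative, not taken from the original setup.

```typescript
// Hypothetical, self-contained version of the changelog check.
// In a real Dangerfile, `changedFiles` could be built from
// danger.git.modified_files.concat(danger.git.created_files),
// and a non-null result would be passed to warn() or fail().
const CHANGELOG_PATH = "CHANGELOG.YML"; // assumed to live at the repository root

function checkChangelog(changedFiles: string[]): string | null {
  // Nothing to report when the pull request touches the changelog
  if (changedFiles.indexOf(CHANGELOG_PATH) !== -1) {
    return null;
  }
  return `Please add your changes to ${CHANGELOG_PATH}.`;
}

// Example calls with made-up file lists
console.log(checkChangelog(["app/src/main/java/Foo.kt"])); // logs: Please add your changes to CHANGELOG.YML.
console.log(checkChangelog(["app/src/main/java/Foo.kt", "CHANGELOG.YML"])); // logs: null
```

Returning a message instead of posting it directly keeps the rule easy to test outside of a pull request.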

This alone is quite a powerful tool that can reduce the time required for code review. It can be extended to fit specific workflows, for example to make sure that the software version is increased before a release, or to post warnings when business-critical parts of the code-base are touched.
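The warning about business-critical code could be sketched in the same style. The directory prefixes below are hypothetical placeholders for a team’s own critical areas, and in a Dangerfile the returned message would be handed to `warn()`.

```typescript
// Hypothetical list of business-critical directories; adjust to the project.
const CRITICAL_PREFIXES: string[] = [
  "app/src/main/java/com/example/payment/",
  "app/src/main/java/com/example/auth/",
];

// Returns the changed files that live under one of the critical prefixes.
function criticalFilesTouched(changedFiles: string[], prefixes: string[]): string[] {
  return changedFiles.filter((file) =>
    prefixes.some((prefix) => file.indexOf(prefix) === 0),
  );
}

// Builds a warning message, or null if nothing critical was changed.
function criticalWarning(changedFiles: string[]): string | null {
  const touched = criticalFilesTouched(changedFiles, CRITICAL_PREFIXES);
  if (touched.length === 0) {
    return null;
  }
  return `This pull request changes business-critical code:\n- ${touched.join("\n- ")}`;
}

// Example call with a made-up file list
console.log(criticalWarning(["app/src/main/java/com/example/payment/Checkout.kt", "README.md"]));
```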

DangerJS has full access to the checked-out commit, including both the source directories and the build output. This allows us to run a few steps in our pipeline before triggering DangerJS, and to use their results.

Our goal is to automate parts of the code review process, especially the parts that focus on code style issues. To do so, we will embed a linter and a static code analysis tool into the pipeline.

Looking at a Kotlin code-base, the linter of our choice will be ktlint. For static code analysis we will be using detekt.

ktlint will make sure that the formatting of our code stays consistent — we follow the same indentation rules, variable naming, braces and much more. This will ensure that different parts of the code-base can be read in the same way and thus it’s easier to switch between them.

detekt helps keep code complexity low. Besides complexity rules, it contains rules about concurrency (such as coroutine usage), performance optimizations and general code structure.

To add both ktlint and detekt to our pipeline, and to let DangerJS use their results, we need both tools to generate XML reports. The DangerJS plugin we will use reads checkstyle-formatted reports, which means it is not limited to ktlint and detekt: it can also read reports from SwiftLint or other linters.
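For reference, a checkstyle report is a small XML document: a `<checkstyle>` root with one `<file name="…">` element per source file and one `<error line="…" column="…" severity="…" message="…" source="…"/>` element per issue. The regex-based sketch below illustrates how such a report can be turned into a list of issues. It is an illustration only, not the plugin’s actual implementation, and not a general XML parser: it assumes well-formed reports without `>` characters inside attribute values.

```typescript
// One issue from a checkstyle-formatted report.
interface LintIssue {
  file: string;
  line: number;
  column: number;
  severity: string;
  message: string;
  source: string;
}

// Decodes the five predefined XML entities ("&amp;" last, so it is not decoded twice).
function decodeXml(value: string): string {
  return value
    .replace(/&quot;/g, '"')
    .replace(/&apos;/g, "'")
    .replace(/&lt;/g, "<")
    .replace(/&gt;/g, ">")
    .replace(/&amp;/g, "&");
}

// Parses name="value" pairs from the inside of a single tag.
function parseAttributes(tagBody: string): Record<string, string> {
  const attributes: Record<string, string> = {};
  const attributePattern = /([\w:-]+)="([^"]*)"/g;
  let match: RegExpExecArray | null;
  while ((match = attributePattern.exec(tagBody)) !== null) {
    attributes[match[1]] = decodeXml(match[2]);
  }
  return attributes;
}

// Regex-based parser: good enough for simple, well-formed reports only.
function parseCheckstyle(xml: string): LintIssue[] {
  const issues: LintIssue[] = [];
  // "[^/>]" before ">" skips self-closing <file .../> elements (files without issues)
  const filePattern = /<file\s([^>]*[^/>])>([\s\S]*?)<\/file>/g;
  let fileMatch: RegExpExecArray | null;
  while ((fileMatch = filePattern.exec(xml)) !== null) {
    const fileName = parseAttributes(fileMatch[1])["name"];
    const errorPattern = /<error\s([^>]*?)\/?>/g;
    let errorMatch: RegExpExecArray | null;
    while ((errorMatch = errorPattern.exec(fileMatch[2])) !== null) {
      const attrs = parseAttributes(errorMatch[1]);
      issues.push({
        file: fileName,
        line: Number(attrs["line"]),
        column: Number(attrs["column"] || "0"),
        severity: attrs["severity"] || "error",
        message: attrs["message"] || "",
        source: attrs["source"] || "",
      });
    }
  }
  return issues;
}

// Made-up sample report with a single issue
const sampleReport = [
  '<?xml version="1.0" encoding="utf-8"?>',
  '<checkstyle version="8.0">',
  '  <file name="app/src/main/java/com/example/Foo.kt">',
  '    <error line="3" column="1" severity="error" message="Unexpected blank line(s) before &quot;}&quot;" source="no-blank-line-before-rbrace" />',
  "  </file>",
  "</checkstyle>",
].join("\n");

console.log(parseCheckstyle(sampleReport));
```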

This example uses GitHub Actions to run the CI pipeline. Since DangerJS works closely with pull requests, GitHub Actions have the benefit of supplying a GitHub actor and token out of the box.

The DangerJS workflow on GitHub Actions installs Danger and runs it on every pull request. DangerJS then looks for its configuration file (the Dangerfile) and executes it, for example with the changelog check described above.

The next step is to add the lint report plugin. Since it’s a different package, we’ll need to install it in the workflow as well.

Now we can adjust our DangerJS configuration (the Dangerfile) so that the plugin picks up our ktlint reports.

The last step is to run ktlint in our GitHub Actions workflow. The lint reports are build output and are not committed to the repository, so without this step the file search for **/reports/ktlint/*.xml would not find any reports, and the plugin would have nothing to post.

The workflow therefore gets an additional step that executes ktlint before Danger runs.

Opening a pull request now triggers ktlint and then DangerJS, which uses the plugin we installed to scan for ktlint reports, parse them, and post the issues introduced in this pull request as review comments.
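Restricting comments to issues introduced by the pull request boils down to comparing each issue’s line with the lines the pull request added. The hypothetical sketch below reads the added line numbers from the unified diff of a single file and filters issues accordingly. It illustrates the idea rather than the plugin’s code, and it assumes that report paths have already been normalized to the repository-relative paths used in the diff.

```typescript
// Trimmed-down issue shape; a real report also has column, severity, etc.
interface LintIssue {
  file: string; // assumed to be repository-relative, like the paths in the diff
  line: number;
  message: string;
}

// Collects the new-file line numbers of all added lines in the unified diff of ONE file.
function addedLines(unifiedDiff: string): number[] {
  const added: number[] = [];
  let newLine = 0;
  let inHunk = false;
  for (const raw of unifiedDiff.split("\n")) {
    // Hunk header, e.g. "@@ -1,3 +1,4 @@": "+1" is the first line number in the new file
    const header = /^@@ -\d+(?:,\d+)? \+(\d+)(?:,\d+)? @@/.exec(raw);
    if (header !== null) {
      newLine = Number(header[1]);
      inHunk = true;
      continue;
    }
    if (!inHunk) {
      continue; // skip the "---"/"+++" file headers before the first hunk
    }
    if (raw.charAt(0) === "+") {
      added.push(newLine); // added line: exists only in the new file
      newLine++;
    } else if (raw.charAt(0) === " ") {
      newLine++; // context line: exists in both versions
    }
    // "-" (removed) and "\ No newline at end of file" lines do not advance the counter
  }
  return added;
}

// Keeps only the issues that sit on a line added by the pull request.
function issuesOnChangedLines(issues: LintIssue[], diffsByFile: Record<string, string>): LintIssue[] {
  return issues.filter((issue) => {
    const diff = diffsByFile[issue.file];
    return diff !== undefined && addedLines(diff).indexOf(issue.line) !== -1;
  });
}

// Made-up example data
const sampleDiff = [
  "--- a/app/Foo.kt",
  "+++ b/app/Foo.kt",
  "@@ -1,3 +1,4 @@",
  " package app",
  "+import kotlin.math.max",
  " ",
  " class Foo",
].join("\n");

const sampleIssues: LintIssue[] = [
  { file: "app/Foo.kt", line: 2, message: "Unused import" },
  { file: "app/Foo.kt", line: 4, message: "Pre-existing issue" },
  { file: "app/Bar.kt", line: 1, message: "File not touched by the pull request" },
];

// Only the "Unused import" issue on line 2 remains
console.log(issuesOnChangedLines(sampleIssues, { "app/Foo.kt": sampleDiff }));
```

Filtering on added lines means pre-existing issues in touched files stay silent, which keeps review comments focused on what the author actually changed.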

The full Android setup, with ktlint, detekt and Android Lint, uses a GitHub workflow that runs each of these tools before Danger, together with a Dangerfile that scans the reports of all three.
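Scanning several tools mostly means looking for several file masks. The sketch below groups report files by tool using a simplified glob matcher (`**/` for any number of directories, `*` within one path segment). Only the ktlint mask comes from the setup described here; the detekt and Android Lint masks and the example paths are assumptions, since the actual report locations depend on the Gradle configuration.

```typescript
// Converts a simplified glob into a RegExp:
//   "**/" matches zero or more directories, "*" matches within one path segment.
// Other glob features (braces, "?", character classes) are treated as literals or unsupported.
function globToRegExp(glob: string): RegExp {
  let pattern = "";
  for (let i = 0; i < glob.length; i++) {
    const ch = glob.charAt(i);
    if (ch === "*" && glob.charAt(i + 1) === "*") {
      if (glob.charAt(i + 2) === "/") {
        pattern += "(?:.*/)?"; // "**/" -> optional leading directories
        i += 2;
      } else {
        pattern += ".*"; // a trailing "**" matches anything
        i += 1;
      }
    } else if (ch === "*") {
      pattern += "[^/]*";
    } else if ("\\^$.|?+()[]{}".indexOf(ch) !== -1) {
      pattern += "\\" + ch; // escape regex metacharacters
    } else {
      pattern += ch;
    }
  }
  return new RegExp("^" + pattern + "$");
}

// Hypothetical masks per tool (only the ktlint mask appears in the text above)
const REPORT_MASKS: Record<string, string> = {
  ktlint: "**/reports/ktlint/*.xml",
  detekt: "**/reports/detekt/*.xml",
  androidLint: "**/reports/lint-results*.xml",
};

// Groups the given file paths by the tool whose mask they match.
function groupReports(paths: string[], masks: Record<string, string>): Record<string, string[]> {
  const grouped: Record<string, string[]> = {};
  for (const tool of Object.keys(masks)) {
    const regex = globToRegExp(masks[tool]);
    grouped[tool] = paths.filter((path) => regex.test(path));
  }
  return grouped;
}

// Made-up build output
const buildOutput = [
  "app/build/reports/ktlint/ktlint-main.xml",
  "app/build/reports/detekt/detekt.xml",
  "app/build/reports/lint-results-debug.xml",
  "app/build/reports/lint-results-debug.html",
];

console.log(groupReports(buildOutput, REPORT_MASKS));
```

Each group can then be parsed and filtered in the same way, so adding another checkstyle-compatible tool is mostly a matter of adding a mask.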

The result is that the team members no longer have to keep an eye out for mundane things like code style during the code reviews. This will save a lot of time long-term and ensure that the team’s own best practices are being followed.

To keep improving this setup, the rule sets of each tool can be adjusted to fit the team’s needs. The next step would be to write custom rules for these tools to automate not just the code style decisions made by the team, but also rules about, for example, concurrency (in Kotlin, rules about coroutine scope or context usage) or other more complex topics.
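Before writing a full custom rule, a team could try a lightweight text check in the Dangerfile. The sketch below applies two hypothetical coroutine rules (no `GlobalScope`, no hard-coded `Dispatchers`) to lines added to Kotlin files. It is a heuristic stand-in, not a detekt rule: a proper detekt rule is written in Kotlin against the parsed syntax tree and would avoid false positives in strings and comments.

```typescript
// Hypothetical team rule: a pattern matched against added Kotlin lines.
interface TextRule {
  id: string;
  pattern: RegExp;
  message: string;
}

interface Finding {
  file: string;
  line: number;
  ruleId: string;
  message: string;
}

const COROUTINE_RULES: TextRule[] = [
  {
    id: "NoGlobalScope",
    pattern: /\bGlobalScope\b/,
    message: "Use a lifecycle-bound scope (e.g. viewModelScope) instead of GlobalScope.",
  },
  {
    id: "InjectDispatchers",
    pattern: /\bDispatchers\.(IO|Default|Main)\b/,
    message: "Inject the dispatcher instead of hard-coding it.",
  },
];

// `added` maps a line number in the new file to the text that was added there.
function checkAddedKotlinLines(file: string, added: Record<number, string>, rules: TextRule[]): Finding[] {
  const findings: Finding[] = [];
  if (!/\.kts?$/.test(file)) {
    return findings; // only Kotlin sources and scripts are checked
  }
  for (const key of Object.keys(added)) {
    const line = Number(key);
    const text = added[line];
    // Skip single-line comments to reduce false positives; this is still only a heuristic
    if (/^\s*\/\//.test(text)) {
      continue;
    }
    for (const rule of rules) {
      if (rule.pattern.test(text)) {
        findings.push({ file, line, ruleId: rule.id, message: rule.message });
      }
    }
  }
  return findings;
}

// Made-up example: line 12 violates both rules, line 13 is a comment
const findings = checkAddedKotlinLines(
  "app/src/main/java/com/example/SyncManager.kt",
  {
    12: "    GlobalScope.launch(Dispatchers.IO) { sync() }",
    13: "    // GlobalScope is fine here?",
  },
  COROUTINE_RULES,
);
console.log(findings.map((f) => `${f.file}:${f.line} ${f.ruleId}`));
```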